At CES 2025, Nvidia unveiled a new desktop computer developed in collaboration with MediaTek. The $3,000 system, named “Project DIGITS,” is built around a Superchip that pairs a cut-down Arm-based Grace CPU with a Blackwell GPU. It offers new capabilities aimed at both the AI and high-performance computing (HPC) markets.

Project DIGITS is powered by Nvidia’s new GB10 Grace Blackwell Superchip, which has 20 Arm cores. The system is designed to deliver “petaflop” AI computing performance at FP4 precision, making it suitable for prototyping, fine-tuning, and running large AI models.

Since the release of the G8x line of video cards in 2006, Nvidia has provided CUDA tools and libraries for its GPUs, fostering a vibrant ecosystem for AI applications. With demand for high-performance GPUs growing, Project DIGITS is expected to make AI software development more accessible, especially for developers who currently rely on low-cost consumer GPUs. The ability to run and fine-tune open transformer models (such as Llama) on a desktop is particularly appealing to developers. For example, with 128GB of memory, the DIGITS system overcomes a key limitation of many consumer-grade GPUs, which typically offer only 24GB of memory.

The GB10 Superchip in the DIGITS system combines an Nvidia Blackwell GPU, with the latest CUDA cores and fifth-generation Tensor Cores, and a high-performance Grace-like CPU with 20 power-efficient Arm cores, split between Cortex-X925 and Cortex-A725 designs. The GPU performance of the GB10 is expected to be well below that of the Grace-Blackwell GB200, which has 72 Arm Neoverse V2 cores and two B200 Tensor Core GPUs.

A standout feature of the DIGITS system is its 128GB of unified, coherent memory shared between the CPU and GPU. This memory size breaks the “GPU memory barrier” for AI and HPC models. For comparison, current market prices for the 80GB Nvidia A100 GPU range from $18,000 to $20,000. Because the CPU and GPU share one memory pool, data does not have to be copied between them over PCIe, which removes a common bottleneck. The memory is fixed at 128GB, and users cannot expand it. Additionally, the system supports ConnectX networking, Wi-Fi, Bluetooth, and USB connections.
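As a rough illustration of the price comparison above, the following Python sketch computes the cost per gigabyte of memory using only figures quoted in this article ($3,000 for 128GB, and $18,000 to $20,000 for an 80GB A100). The function name is illustrative. The comparison ignores memory bandwidth, compute throughput, and the host system an A100 needs, so it is back-of-the-envelope arithmetic rather than a benchmark.

```python
def cost_per_gb(price_usd: float, memory_gb: float) -> float:
    """Return the price in US dollars per gigabyte of memory."""
    return price_usd / memory_gb


# Figures quoted in the article; actual street prices vary.
digits = cost_per_gb(3_000, 128)
a100_low = cost_per_gb(18_000, 80)
a100_high = cost_per_gb(20_000, 80)

print(f"Project DIGITS: ${digits:.2f}/GB")
print(f"A100 80GB:      ${a100_low:.2f} to ${a100_high:.2f}/GB")
```

On these numbers alone, the DIGITS memory is roughly an order of magnitude cheaper per gigabyte, although the two memory systems are not equivalent in speed.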

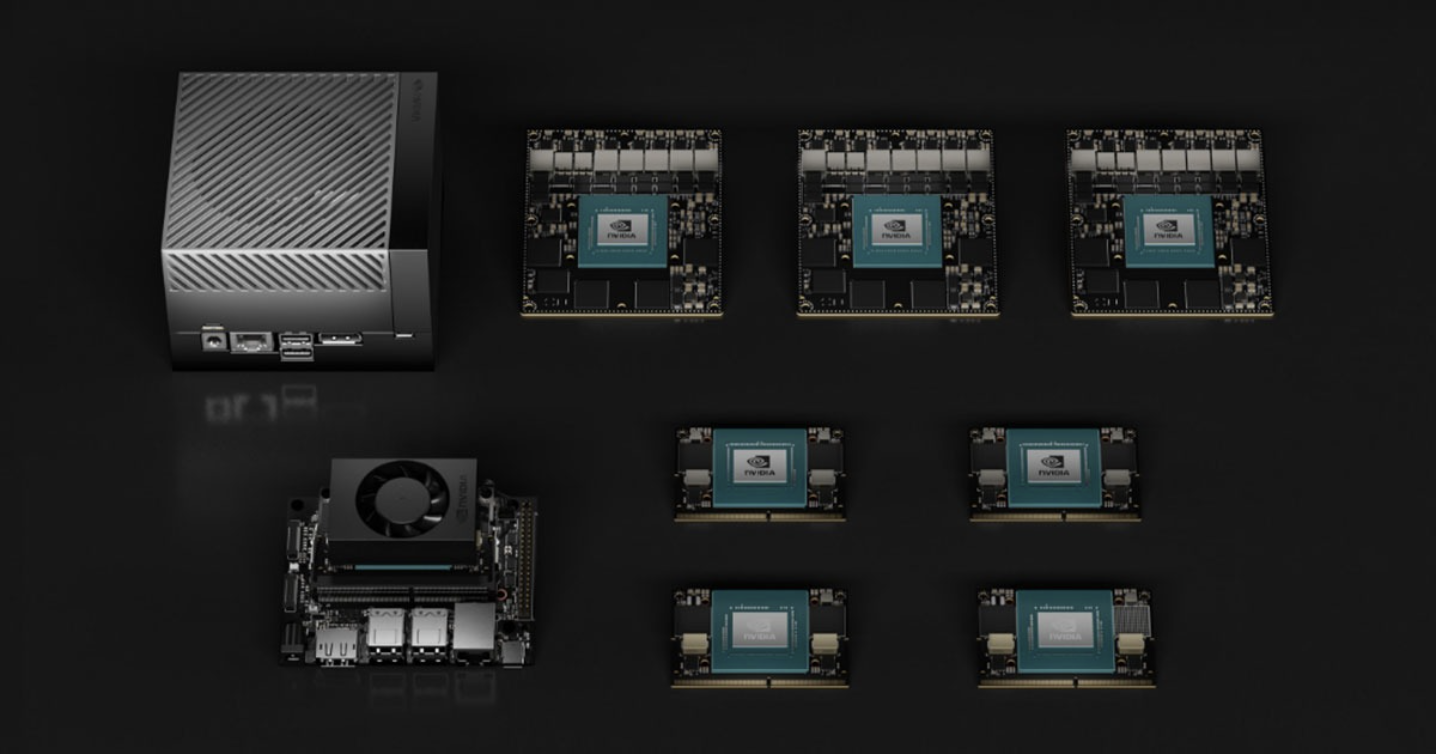

The DIGITS system offers up to 4TB of NVMe storage. Although no specific power requirements were provided, the small size of the system suggests low power consumption. Like the Mac mini, it’s designed to generate minimal heat. Based on images from CES, the system’s case has a sponge-like material at the front and back that likely facilitates airflow and acts as a filter. This design suggests that the system is optimized for cool, quiet, and efficient operation rather than for maximum performance at high power draw.

Despite its compact size, the DIGITS system is powerful enough to run AI models with up to 200 billion parameters. Nvidia claims that by using ConnectX networking, two Project DIGITS units can be linked together to run models with up to 405 billion parameters. Developers can build models and run inference on their desktops and then seamlessly deploy them on cloud or data center infrastructure.
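To see how these parameter counts relate to the 128GB of memory per unit, the sketch below computes an upper bound on the number of parameters whose weights would fit. It assumes 4-bit weights (the FP4 precision Nvidia quotes for the system), 1GB = 10^9 bytes, and that two linked units can hold a model split across both memories. The function and constant names are illustrative.

```python
def max_params_billions(memory_gb: float, bits_per_weight: int) -> float:
    """Upper bound on parameters (in billions) whose weights fit in memory_gb."""
    bytes_per_weight = bits_per_weight / 8
    return memory_gb / bytes_per_weight


UNIT_MEMORY_GB = 128  # unified memory per DIGITS unit, per the article

for units in (1, 2):
    total_gb = units * UNIT_MEMORY_GB
    bound = max_params_billions(total_gb, bits_per_weight=4)
    print(f"{units} unit(s): {total_gb} GB -> at most ~{bound:.0f}B parameters at 4 bits")
```

The quoted limits of 200 billion and 405 billion parameters sit below these bounds, which is consistent with some memory being left over for the operating system, activations, and the inference cache.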

Nvidia envisions Project DIGITS making AI accessible to millions of developers, researchers, and students, helping shape the future of AI. The system is designed to run quantized large language models (LLMs) locally, primarily for inference rather than training. The advertised performance of one petaFLOP is based on FP4 precision weights, which are more memory-efficient than higher-precision formats like FP8 or FP16. For example, a Llama-3-70B model at FP8 precision (one byte per parameter) requires about 70GB of memory for its weights, whereas FP4 precision would cut that down to about 35GB.
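The memory figures above follow directly from the number of bits stored per weight. A minimal sketch of that arithmetic is shown below. It counts weights only, takes 1GB as 10^9 bytes, and uses an illustrative function name. Real deployments also need memory for activations and the inference cache.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in GB (10^9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    return params_billions * bytes_per_weight


# A 70-billion-parameter model at three common precisions.
for label, bits in [("FP16", 16), ("FP8", 8), ("FP4", 4)]:
    print(f"70B parameters at {label}: ~{weight_memory_gb(70, bits):.0f} GB")
```

Halving the bits per weight halves the footprint, which is why a 70B model that needs about 140GB at FP16 fits comfortably in 128GB once quantized to FP8 or FP4.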

Although Project DIGITS is not Nvidia’s first desk-side system, it stands out from previous models. In 2024, GPTshop.ai introduced a GH200-based desk-side system with higher performance, but at a significantly higher cost. Unlike Project DIGITS, GPTshop.ai’s systems feature the full power of either the GH200 Grace-Hopper Superchip or the GB200 Grace-Blackwell Superchip in a desktop form factor.

The DIGITS system could also be an interesting platform for desktop HPC applications. In addition to running larger AI models, the unified CPU-GPU memory is well suited to HPC tasks. For example, a recent HPC study showed that memory capacity was a key factor in enabling a computational fluid dynamics (CFD) simulation on an Intel Xeon processor. Similarly, HPC applications often struggle with the small memory domains of PCIe-attached GPUs. The DIGITS system’s integrated memory could overcome these limitations by letting larger problems stay resident in memory that the GPU can access directly.

Although benchmarks are still needed to determine its full suitability for desktop HPC, the DIGITS system could serve as an attractive building block for a Beowulf-style cluster. Such clusters are typically built from multiple servers with PCIe-attached GPUs, but a small, fully integrated system with unified CPU-GPU memory and built-in networking could offer a more balanced, cost-effective alternative. In addition, the system already runs Linux and includes ConnectX networking, making it a versatile option for cluster computing.