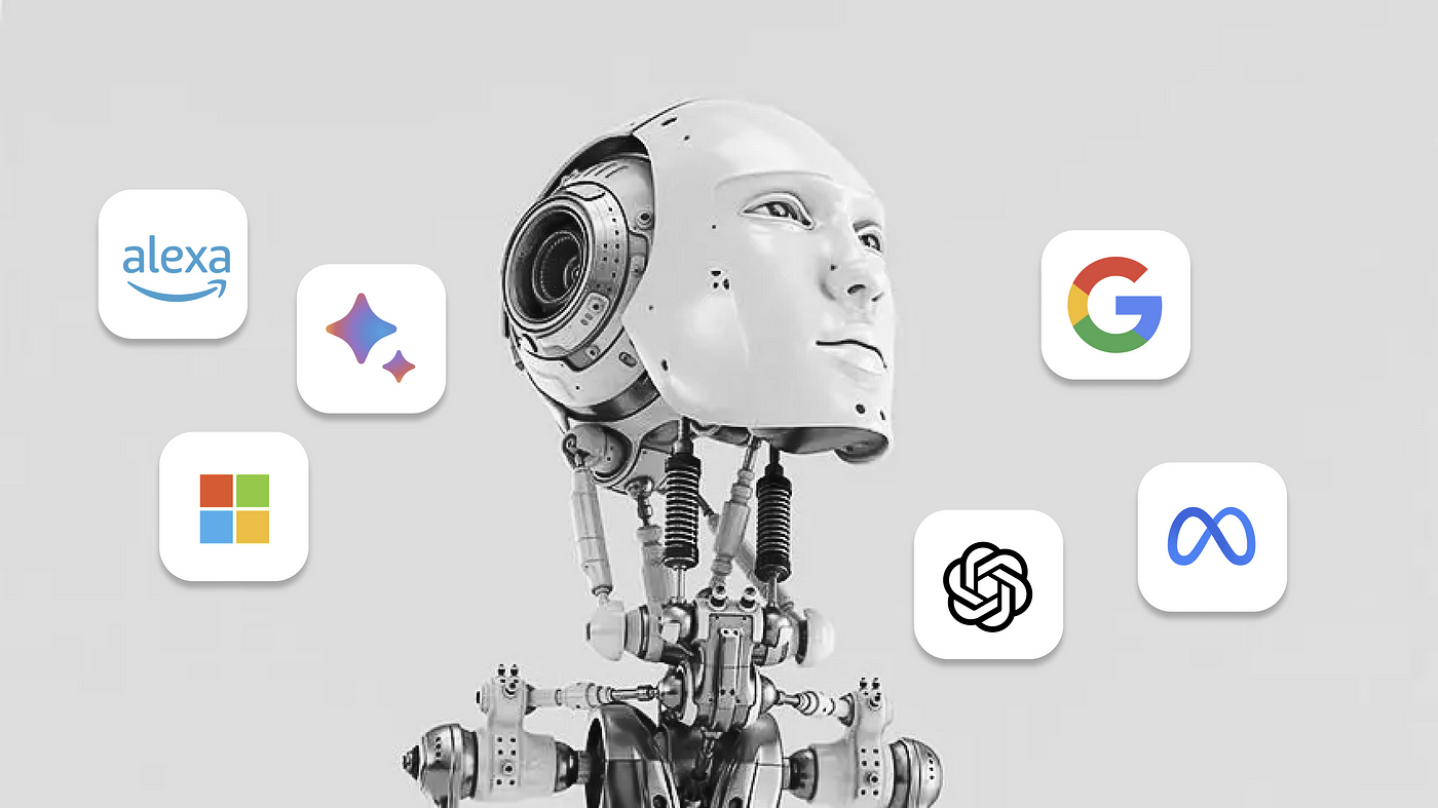

Last week, President Biden met with seven major artificial intelligence companies to discuss voluntary safeguards for AI products and explore future regulatory actions. Following that meeting, four of these companies have come together to form a new coalition focused on promoting responsible AI development and setting industry standards amid growing government and public scrutiny.

The new coalition, called the Frontier Model Forum, includes Anthropic, Google, Microsoft, and OpenAI. The goal of the Forum is to ensure the safe and responsible development of advanced AI models, also referred to as frontier models. These models are expected to perform better than current top-tier models across a wide range of tasks.

The Forum plans to draw on the technical and operational expertise of its members to advance AI safety research, establish technical standards, and build a public library of solutions. Its core objectives include:

- Advancing research on AI safety to promote the responsible development of frontier models, minimize risks, and enable independent, standardized evaluations of AI capabilities and safety.

- Identifying best practices for developing and deploying these models responsibly, and helping the public understand their nature, capabilities, and limitations.

- Collaborating with policymakers, academics, civil society, and other companies to share knowledge about AI safety risks.

- Supporting efforts to create applications that tackle major societal challenges, such as climate change, early cancer detection, and cybersecurity threats.

The coalition defines “frontier models” as large-scale machine learning models that surpass the abilities of current leading models. The Forum intends to focus on three main areas: identifying best practices, advancing safety research, and sharing information between companies and governments.

Specific research areas the Forum plans to pursue include adversarial robustness, interpretability, scalable oversight, and anomaly detection. The group also plans to establish an advisory board to guide its strategy, along with institutional arrangements such as a charter, a governance structure, and funding.

Although the coalition currently has only four members, it is open to other organizations that develop frontier models. Prospective members must demonstrate a commitment to the safety of these models through both technical and institutional means, and must actively participate in the Forum's initiatives.

President Biden has acknowledged the transformative potential of AI and stressed the importance of ensuring its development remains safe and responsible. He highlighted the need for bipartisan legislation to regulate the collection of personal data, protect democracy, and address the potential impact of AI on jobs and industries. Biden emphasized that a common framework for governing AI development is necessary to ensure safety.

Critics, however, have raised concerns that self-regulation by the major AI players could lead to monopolies in the tech industry. Shane Orlick, president of the AI writing platform Jasper, called for deeper and ongoing government engagement with AI innovators. He pointed out that AI will affect every aspect of life, and that the government must play a role in ensuring the technology is developed responsibly, with a focus on ethics and public safety.

Orlick also warned that regulation must not stifle competition or create monopolies, and should instead foster an environment where the entire AI community can contribute to a responsible transformation of society.