The rise of generative AI has significantly increased the demand for GPUs and other forms of accelerated computing. To help businesses scale their computing investments more predictably, Nvidia and several server partners have introduced Enterprise Reference Architectures (ERA), which draw on practices from high-performance computing (HPC).

Large language models (LLMs) and other foundation models have sparked a rush for GPUs, with Nvidia emerging as a major beneficiary. In 2023, the company shipped 3.76 million data center GPUs, over a million more than the previous year. The demand has continued into 2024 as companies race to acquire GPUs to support GenAI. This growth has helped push Nvidia to become the world’s most valuable company, with a market cap of $3.75 trillion.

Amidst this surge in demand, Nvidia launched its ERA program, aiming to provide businesses with a clear blueprint to scale their HPC infrastructure in a way that minimizes risk and maximizes efficiency. The goal is to help customers build and manage their GenAI applications more effectively.

Nvidia believes that the ERA program will shorten the time it takes server manufacturers to bring products to market while enhancing performance, scalability, and manageability. The program also focuses on improving security and simplifying complex systems. So far, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro have partnered with Nvidia on the ERA program, with additional server makers expected to join.

The company explains that by using technical components from the supercomputing world and combining them with design recommendations based on years of experience, the ERA program aims to eliminate the challenge of building these systems from scratch. It offers flexible, cost-effective configurations that take the guesswork and risk out of the process.

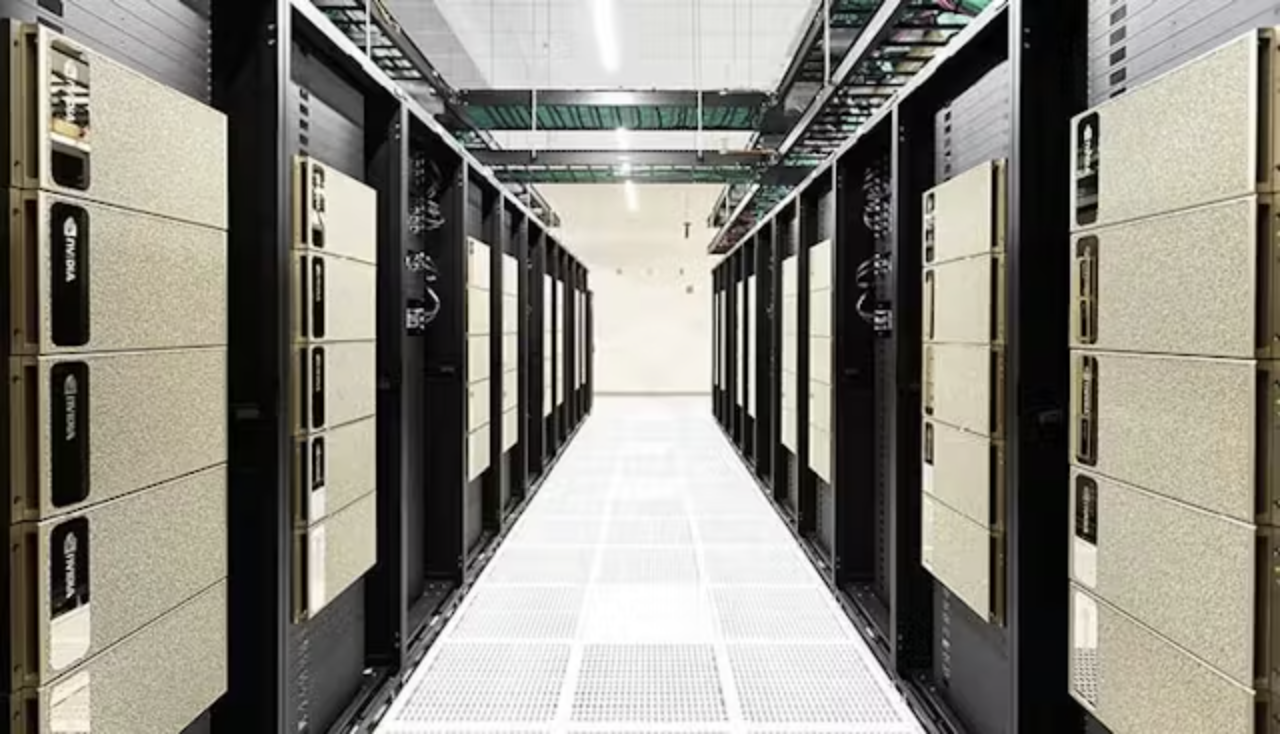

ERA uses certified server configurations with GPUs, CPUs, and network interface cards (NICs) that are tested to deliver performance at scale. This includes the Nvidia Spectrum-X AI Ethernet platform and Nvidia BlueField-3 DPUs, among others.

The ERA is designed for large-scale deployments, ranging from four to 128 nodes, with anywhere from 32 to 1,024 GPUs. Nvidia sees this range as the ideal setup for transforming data centers into “AI factories.” That range sits below Nvidia’s NCP (Nvidia Cloud Partner) Reference Architecture, which is aimed at even larger-scale foundation model training, starting at a minimum of 128 nodes and scaling up to 100,000 GPUs.

The ERA features different design patterns depending on the size of the cluster. The pattern names appear to list the per-node CPU, GPU, and NIC counts in that order. For example, the “2-4-3” design uses a 2U compute node with two CPUs, up to four GPUs, and three NICs. This configuration is suitable for clusters ranging from eight to 96 nodes. Another design, the “2-8-5” pattern, uses 4U nodes with two CPUs, up to eight GPUs, and five NICs. This pattern scales from four to 64 nodes.
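
To make the sizing arithmetic concrete, the short Python sketch below reads each pattern name as per-node CPU, GPU, and NIC counts and multiplies the GPU count by the node ranges quoted above. The `NodePattern` class, the `NODE_RANGES` table, and the `gpu_range` helper are hypothetical names introduced for illustration only, and the CPU-GPU-NIC digit order is an assumption inferred from the two examples rather than something Nvidia's documentation is quoted on here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodePattern:
    """Per-node component counts read from a name such as "2-8-5"."""
    cpus: int
    gpus: int
    nics: int

    @classmethod
    def parse(cls, name: str) -> "NodePattern":
        # Assumption: digits are ordered CPUs-GPUs-NICs.
        cpus, gpus, nics = (int(part) for part in name.split("-"))
        return cls(cpus, gpus, nics)

    def max_gpus(self, nodes: int) -> int:
        # Upper bound: every node carries its full GPU complement.
        return self.gpus * nodes


# Node ranges quoted in the article for each pattern (min nodes, max nodes).
NODE_RANGES = {"2-4-3": (8, 96), "2-8-5": (4, 64)}


def gpu_range(name: str) -> tuple[int, int]:
    """Return the fully populated GPU count at the smallest and largest cluster size."""
    pattern = NodePattern.parse(name)
    min_nodes, max_nodes = NODE_RANGES[name]
    return pattern.max_gpus(min_nodes), pattern.max_gpus(max_nodes)


if __name__ == "__main__":
    for name in NODE_RANGES:
        low, high = gpu_range(name)
        print(f"{name}: {low} to {high} GPUs when fully populated")
```

Under these assumptions, both patterns start at 32 GPUs, which matches the lower bound quoted for the ERA as a whole. The 1,024-GPU upper bound corresponds to 128 nodes with eight GPUs each.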

By collaborating with server manufacturers on tested, proven architectures, Nvidia helps businesses rapidly and securely build their AI factories.

The company explains that the transformation of traditional data centers into AI factories is changing the way enterprises process and analyze data. By integrating cutting-edge computing and networking technologies, companies can meet the heavy computational demands of AI applications.