NVIDIA made several significant announcements at its GTC 2024 conference, which ran through March 21. In his keynote, CEO Jensen Huang introduced the next-generation Blackwell GPU architecture, designed to help organizations build and run real-time generative AI on large language models with trillions of parameters.

Huang highlighted that generative AI is shaping a new industry, transforming the way we compute. NVIDIA’s new processor is specifically built for this generative AI era.

NVIDIA also revealed plans for the future of data centers. The company shared a blueprint for building highly efficient AI infrastructure, with support from partners including Schneider Electric, Vertiv, and Ansys. It demonstrated the new data center as a digital twin in NVIDIA Omniverse, a platform for building 3D applications, tools, and services. NVIDIA also introduced cloud APIs that let developers integrate Omniverse technologies into existing design and automation software for digital twins.

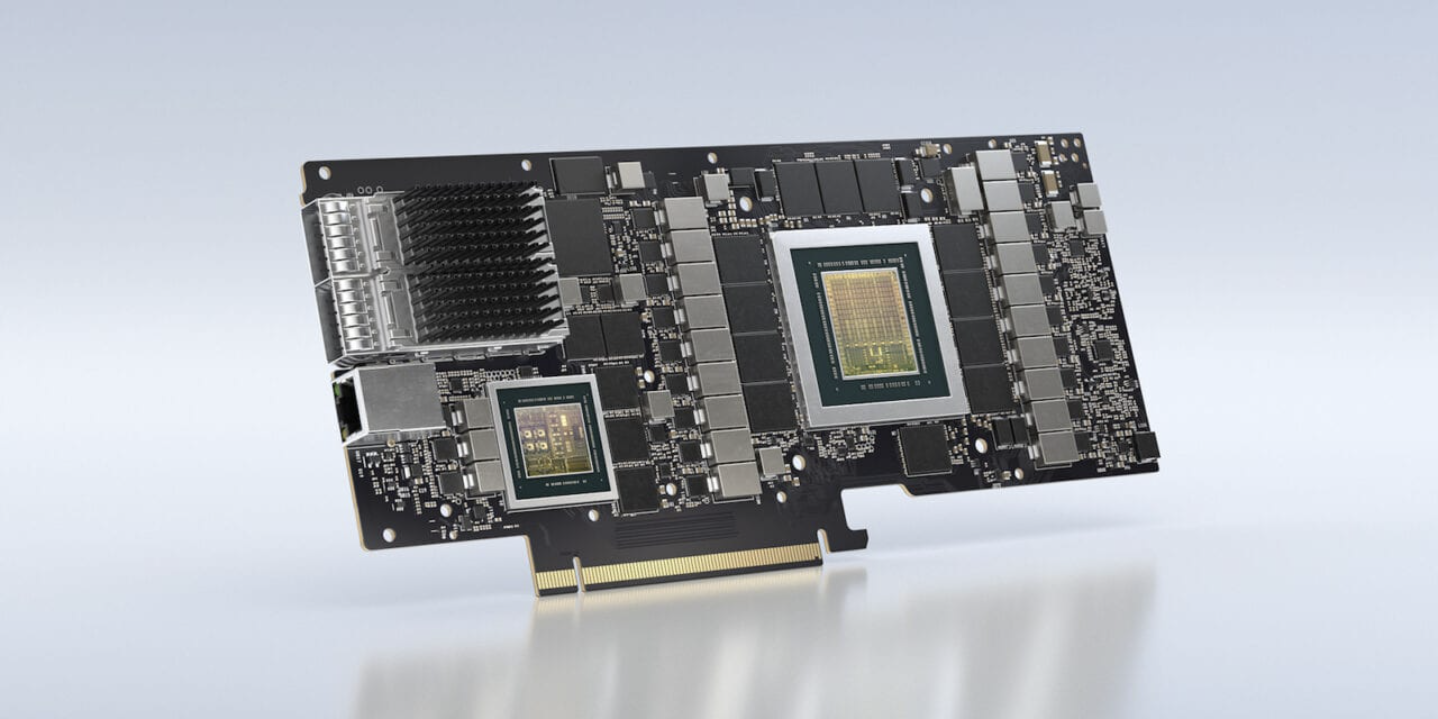

The latest AI supercomputer from NVIDIA is based on the liquid-cooled GB200 NVL72 system. It consists of two racks, each containing 18 NVIDIA Grace CPUs and 36 Blackwell GPUs, connected by fourth-generation NVLink switches.
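As a quick sanity check on those figures, the sketch below tallies the system totals. It assumes the CPU and GPU counts are per rack, which is the reading consistent with the 72 in the NVL72 name; the function and constant names are hypothetical and used only for illustration.

```python
# Counts taken from the article; treating them as per-rack is an assumption.
RACKS = 2
GRACE_CPUS_PER_RACK = 18
BLACKWELL_GPUS_PER_RACK = 36


def system_totals(racks, cpus_per_rack, gpus_per_rack):
    """Return (total_cpus, total_gpus, gpus_per_cpu) for the whole system."""
    total_cpus = racks * cpus_per_rack
    total_gpus = racks * gpus_per_rack
    return total_cpus, total_gpus, total_gpus // total_cpus


total_cpus, total_gpus, gpus_per_cpu = system_totals(
    RACKS, GRACE_CPUS_PER_RACK, BLACKWELL_GPUS_PER_RACK
)
print(f"{total_cpus} Grace CPUs, {total_gpus} Blackwell GPUs, "
      f"{gpus_per_cpu} GPUs per CPU")
```

Under that assumption the two racks together hold 36 Grace CPUs and 72 Blackwell GPUs, or two GPUs for every CPU.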

One of the key partners in this development, Cadence, introduced its Reality digital twin platform at the event, describing it as the first AI-driven digital twin solution for sustainable data center design and modernization. Cadence says the platform can improve data center energy efficiency by up to 30%. During the demonstration, engineers used the platform to unify and visualize multiple CAD datasets with greater precision. They also simulated airflows and the performance of the new liquid-cooling systems, using Ansys software to bring simulation data into the digital twin.

NVIDIA showcased how digital twins can help businesses optimize data center designs before physical construction, allowing teams to better plan for different scenarios and improve efficiency.

For all its potential, the Blackwell GPU platform needs infrastructure to run on, and that’s where the biggest cloud providers come in. Huang said that the entire industry is gearing up for Blackwell, which AWS has announced it will offer. The collaboration between NVIDIA and AWS aims to help customers across all industries unlock new generative AI capabilities. AWS has supported NVIDIA GPU instances since 2010, and last year, Huang appeared with AWS CEO Adam Selipsky at re:Invent.

AWS’s Blackwell stack includes Elastic Fabric Adapter networking, EC2 UltraClusters, and the AWS Nitro virtualization infrastructure. Also notable is Project Ceiba, an AI supercomputer built in collaboration with NVIDIA and hosted exclusively on AWS, which will also use the Blackwell platform and serve NVIDIA’s internal R&D team.

Meanwhile, Microsoft is expanding its partnership with NVIDIA by bringing the GB200 Grace Blackwell Superchip to Azure. The collaboration also marks the first integration of Omniverse Cloud APIs with Azure, with a demo showing how factory operators can view real-time data overlaid on a 3D digital twin of their facilities via Power BI.

AWS and Microsoft are targeting industries like healthcare and life sciences. AWS is working with NVIDIA to enhance AI models for computer-aided drug discovery, while Microsoft aims to drive innovation across clinical research and care delivery with improved efficiency.

Google Cloud is also jumping into the generative AI race, integrating NVIDIA NIM microservices into Google Kubernetes Engine (GKE) to speed up AI deployment for enterprises. Additionally, Google Cloud is making it easier for users to deploy the NVIDIA NeMo framework across its platform using GKE and the Google Cloud HPC Toolkit.

Hyperscalers are not the only ones jumping on the “next-gen” cloud bandwagon. NexGen Cloud, a sustainable infrastructure provider, has launched Hyperstack, a GPU-as-a-service platform powered entirely by renewable energy. Its flagship offering is the NVIDIA H100 GPU. In September, NexGen Cloud made headlines by announcing a $1 billion European AI supercloud, which will eventually feature more than 20,000 H100 Tensor Core GPUs. The company has also said it will include Blackwell-powered compute services in this AI supercloud, promising to offer customers the most powerful GPUs on the market to drive innovation and improve efficiency.